Cerebras becomes the world's fastest host for DeepSeek R1, outpacing Nvidia GPUs by 57x


Cerebras Systems announced today that it will host DeepSeek’s breakthrough R1 artificial intelligence model on U.S. servers, promising speeds up to 57 times faster than GPU-based solutions while keeping sensitive data within U.S. borders. The move comes amid growing concerns about China’s rapid AI development and about data privacy.

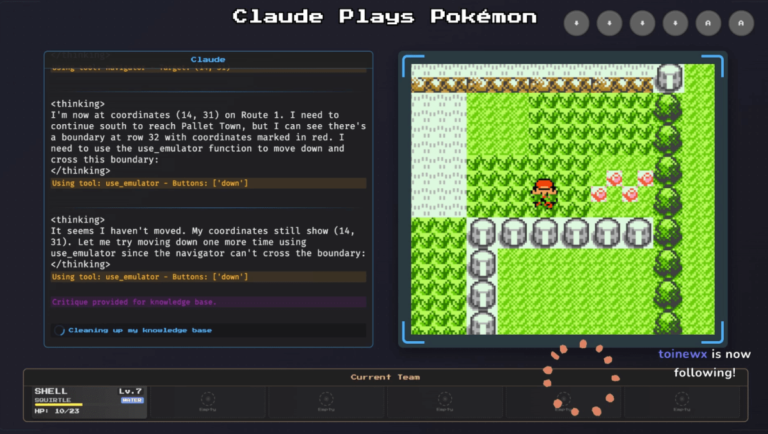

The AI chip startup will deploy a 70-billion-parameter version of DeepSeek-R1 running on its proprietary wafer-scale hardware, delivering 1,600 tokens per second, a dramatic improvement over traditional GPU implementations that have struggled with newer “reasoning” AI models.
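To put the two headline figures side by side, the short sketch below works out the GPU throughput they imply and what that would mean for a single long response. It assumes the 57x figure and the 1,600 tokens-per-second figure describe the same workload, which the announcement does not spell out; the 4,000-token response length is a hypothetical value chosen only for illustration.

```python
def implied_baseline(tokens_per_second: float, speedup: float) -> float:
    """Throughput of the slower system implied by a claimed speedup factor."""
    return tokens_per_second / speedup


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate num_tokens at a steady throughput."""
    return num_tokens / tokens_per_second


CEREBRAS_TPS = 1600.0    # tokens per second, as reported in the article
SPEEDUP = 57.0           # claimed advantage over GPU-based solutions
RESPONSE_TOKENS = 4000   # hypothetical length of one reasoning response

# Assumption: both reported figures refer to the same model and workload.
gpu_tps = implied_baseline(CEREBRAS_TPS, SPEEDUP)

print(f"Implied GPU throughput: {gpu_tps:.1f} tokens/s")
print(f"Wafer-scale time: {generation_seconds(RESPONSE_TOKENS, CEREBRAS_TPS):.1f} s")
print(f"Implied GPU time: {generation_seconds(RESPONSE_TOKENS, gpu_tps):.1f} s")
```

Under these assumptions, a response that takes a few seconds at the reported rate would take a couple of minutes at the implied GPU rate, which is why throughput matters more for reasoning models that emit long chains of intermediate tokens.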

Why DeepSeek’s reasoning models are reshaping enterprise AI

“These reasoning models affect the economy,” said James Wang, a senior executive at Cerebras, in an exclusive interview with VentureBeat. “Any knowledge worker basically has to do some kind of multi-step cognitive task. And these reasoning models will be the tools that enter their workflow.”

The announcement follows a tumultuous week in which DeepSeek’s emergence triggered Nvidia’s largest-ever loss of market value, nearly $600 billion, raising questions about the chip giant’s AI supremacy. Cerebras’s decision directly addresses two key concerns that have emerged: the computing requirements of advanced AI models and data sovereignty.

“If you use DeepSeek’s API, which is very popular right now, that data gets sent straight to China,” Wang explained. “That is one severe caveat that [makes] many U.S. companies and enterprises … not willing to consider [it].”

How Cerebras’s wafer-scale technology beats traditional GPUs at AI speed

Cerebras achieves its speed advantage through a novel chip architecture that keeps entire AI models on a single wafer-sized processor, eliminating the memory bottlenecks that plague GPU-based systems. The company claims that its implementation of DeepSeek-R1 matches or exceeds the performance of OpenAI’s proprietary models while running entirely on U.S. soil.

The development represents a significant shift in the AI landscape. DeepSeek, founded by former hedge fund executive Liang Wenfeng, shocked the industry by achieving sophisticated AI reasoning capabilities at reportedly just 1% of the cost of U.S. competitors. Cerebras’s hosting solution now offers U.S. companies a way to take advantage of these advances while maintaining control of their data.

“It’s actually a nice story that the U.S. research labs gave this gift to the world. The Chinese took it and improved it, but it has limitations because it runs in China and has some censorship problems, and now we’re taking it back and running it in U.S. data centers, without censorship, without data retention,” Wang said.

U.S. tech leadership faces new questions as AI innovation goes global

The service will be available through a developer preview starting today. Although it will initially be free, Cerebras plans to implement API access controls due to strong early demand.

The move comes as U.S. lawmakers grapple with the implications of DeepSeek’s rise, which has exposed potential limitations in the U.S. trade restrictions designed to maintain technological advantages over China. The ability of Chinese companies to achieve breakthrough AI capabilities despite chip export controls has prompted calls for new regulatory approaches.

Industry analysts suggest that this development could accelerate the shift away from GPU-dependent AI infrastructure. “Nvidia is no longer the leader in inference performance,” Wang noted, pointing to benchmarks showing superior performance from various specialized AI chips. “These other AI chip companies are really faster than GPUs for running these latest models.”

The impact extends beyond technical metrics. As AI models increasingly incorporate sophisticated reasoning capabilities, their computing requirements have soared. Cerebras argues that its architecture is better suited to these emerging workloads, potentially reshaping the competitive landscape in enterprise AI deployment.